Redeploying Tor bridges using Terraform and Ansible

Posted on Fri 06 May 2022 in webapps

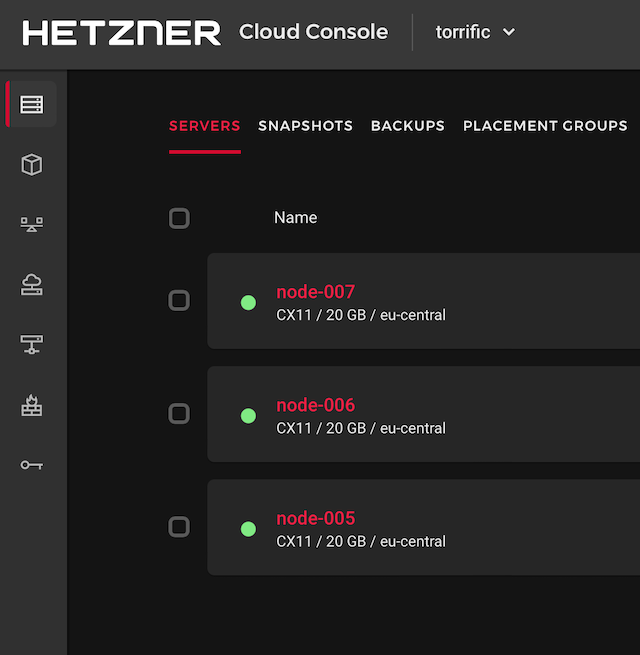

Tor bridges redeployed, more dynamic this time.

Last year the Tor Project tried to get more people to run Tor bridges. These are entry points for people who cannot reach the Tor network by standard means. I deployed a bunch of them and they have been running for months. The trouble with bridges is that their IP addresses may get reported and blocked in places where freedom of speech is not a given. So the bridges I deployed might, over time, all end up blocked in large parts of the world where they are most needed.

The last time I deployed the Tor bridges, I started with a couple and expanded soon thereafter. In retrospect I concluded that it would have been better to automate more of the work by using Terraform to deploy the virtual machines.

And that is exactly what I did this time, but with a twist. I created a shell script that I can run before the rest of the automation to increase a counter. That increase forces Terraform to dispose of the machine with the lowest number and create a new machine with the highest. Voilà: one machine with a new public IP while the rest of the set stays stable. Rinse and repeat periodically, and the public IP addresses my setup uses change over time.
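To make the effect of the counter concrete, here is a small sketch that prints the host numbers for two consecutive runs. The window function and the set size of three are illustrative assumptions to keep the output short; they are not part of the actual setup.

```shell
#!/bin/bash
# Illustrative only: print the zero-padded host numbers for a given counter.
# "window" is a hypothetical helper, not part of the real offset script.
window() {
    # $1 = counter value (first host number), $2 = number of hosts in the set
    i=0
    out=""
    while [ "$i" -lt "$2" ]; do
        out="$out $(printf '%03d' "$(( $1 + i ))")"
        i=$((i + 1))
    done
    # Strip the leading space before printing.
    echo "${out# }"
}

echo "run 4: $(window 4 3)"   # run 4: 004 005 006
echo "run 5: $(window 5 3)"   # run 5: 005 006 007
```

Between the two runs only 004 disappears and only 007 is new, so Terraform has exactly one machine to destroy and one to create.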

Steps in the provisioning process

- shell script to increase the counter (optional)

- apply the Terraform plan to (re)create virtual machines, DNS entries and Zabbix monitoring

- sleep for 15 seconds to give Hetzner Cloud time to spin up (new) virtual machines

- run the Ansible playbook I created last November

- commit/store the current Terraform state in the git repository

Right now I'm running this with a small wrapper script from my laptop, but I intend to run it as a scheduled GitLab CI pipeline later.
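The steps above could be strung together roughly as follows. This is a sketch, not the actual wrapper script: the names offset.sh and site.yml, the commit message, and the DRY_RUN and ROTATE switches are all assumptions made for illustration. terraform.tfstate is Terraform's default local state file name.

```shell
#!/bin/bash
# Sketch of a wrapper around the provisioning steps.
# offset.sh, site.yml, DRY_RUN and ROTATE are hypothetical names.

run() {
    # With DRY_RUN=1, print the command instead of executing it.
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

provision() {
    # The counter step is optional: ROTATE=0 re-applies without replacing a node.
    if [ "${ROTATE:-1}" = "1" ]; then
        run ./offset.sh || return 1
    fi
    # Each later step only runs if the previous one succeeded.
    run terraform apply -auto-approve &&
    run sleep 15 &&
    run ansible-playbook -i ansible/inventories/torrific/hosts site.yml &&
    run git add offset.txt nodenames.tf terraform.tfstate &&
    run git commit -m "Rotate tor bridge nodes"
}
```

Calling provision with DRY_RUN=1 set prints the commands in order without touching anything, which is a cheap way to check the sequence before letting it loose on real machines.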

Offset script

This is the part that really could be better. But it does the job, so it will do for now. The script increases the counter and creates a Terraform variable containing a set of host numbers to (re)create.

    #!/bin/bash
    #
    # Ad hoc nodenames var generator that offsets the hostnames by one
    # every run, effectively making Terraform dispose of a node and create
    # a fresh node.
    #
    # This script probably is not needed after my Terraform skills have improved
    #
    # Systeemkabouter Maljaars - 05-May-2022
    #

    # Read the previous counter value, starting at 0 on the first run
    if [[ -e offset.txt ]]; then
        OFFSET=$(cat offset.txt)
    else
        OFFSET=0
    fi

    NEWOFFSET=$((OFFSET + 1))

    # Ten consecutive, zero-padded host numbers starting at the new counter
    HOST1=$(printf "%03d" "$NEWOFFSET")
    HOST2=$(printf "%03d" "$((NEWOFFSET + 1))")
    HOST3=$(printf "%03d" "$((NEWOFFSET + 2))")
    HOST4=$(printf "%03d" "$((NEWOFFSET + 3))")
    HOST5=$(printf "%03d" "$((NEWOFFSET + 4))")
    HOST6=$(printf "%03d" "$((NEWOFFSET + 5))")
    HOST7=$(printf "%03d" "$((NEWOFFSET + 6))")
    HOST8=$(printf "%03d" "$((NEWOFFSET + 7))")
    HOST9=$(printf "%03d" "$((NEWOFFSET + 8))")
    HOST0=$(printf "%03d" "$((NEWOFFSET + 9))")

    # Write the Terraform variable definition
    echo 'variable "nodenames" {' > nodenames.tf
    echo '  type = set(string)' >> nodenames.tf
    echo "  default = [\"$HOST1\",\"$HOST2\",\"$HOST3\",\"$HOST4\",\"$HOST5\",\"$HOST6\",\"$HOST7\",\"$HOST8\",\"$HOST9\",\"$HOST0\"]" >> nodenames.tf
    echo '}' >> nodenames.tf

    # Remember the counter for the next run
    echo "$NEWOFFSET" > offset.txt

This produces something like the following (shortened here to three hosts):

    variable "nodenames" {
      type = set(string)
      default = ["005","006","007"]
    }

Terraform reads this nodenames.tf file together with the rest of the configuration and uses the nodenames variable when it executes its plan.
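Since the ten HOST lines differ only in the number that is added, the same file could also be generated with a loop. A minimal sketch, assuming the same offset.txt and nodenames.tf file names as the script above; the COUNT variable and the generate_nodenames function are hypothetical additions and not part of the current script.

```shell
#!/bin/bash
# Sketch: loop-based variant of the offset script.
# COUNT and generate_nodenames are hypothetical names.

COUNT=${COUNT:-10}

generate_nodenames() {
    # $1 = first host number, $2 = number of hosts
    list=""
    i=0
    while [ "$i" -lt "$2" ]; do
        # Append one quoted, zero-padded host number plus a trailing comma.
        list="$list\"$(printf '%03d' "$(( $1 + i ))")\","
        i=$((i + 1))
    done
    printf 'variable "nodenames" {\n'
    printf '  type    = set(string)\n'
    # ${list%,} drops the comma after the last entry.
    printf '  default = [%s]\n' "${list%,}"
    printf '}\n'
}

# Read the previous counter value, defaulting to 0 on the first run.
if [ -e offset.txt ]; then
    offset=$(cat offset.txt)
else
    offset=0
fi
new_offset=$((offset + 1))

generate_nodenames "$new_offset" "$COUNT" > nodenames.tf
echo "$new_offset" > offset.txt
```

With this shape, changing the size of the set means running the script with a different COUNT instead of adding or removing HOST lines.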

Terraform bit

Hetzner has a decent Terraform provider for their Cloud setup. For Hetzner DNS and Zabbix integration I rely on third-party providers.

Terraform manages the virtual machines, the DNS records and automatic Zabbix monitoring. It also produces an Ansible inventory file with the current set of IP addresses the setup uses.

    terraform {
      required_providers {
        hcloud = {
          source  = "hetznercloud/hcloud"
          version = "1.33.1"
        }
        hetznerdns = {
          source  = "timohirt/hetznerdns"
          version = "2.1.0"
        }
        zabbix = {
          source  = "claranet/zabbix"
          version = "0.3.0"
        }
      }
    }

    # Define Hetzner provider
    provider "hcloud" {
      token = var.hcloud_token
    }

    provider "hetznerdns" {
      apitoken = var.hetzner_dns_token
    }

    provider "zabbix" {
      user       = var.zabbix_user
      password   = var.zabbix_password
      server_url = var.zabbix_server_url
    }

    # Obtain ssh key data
    data "hcloud_ssh_key" "ssh_key" {
      fingerprint = "72:aa:d6:a2:af:a9:ae:08:d3:db:f4:84:cd:21:40:b7"
    }

    resource "hcloud_server" "ubuntu2204" {
      for_each    = var.nodenames
      name        = format("node-%03d", each.value)
      image       = "ubuntu-22.04"
      server_type = "cx11"
      ssh_keys    = [data.hcloud_ssh_key.ssh_key.id]
    }

    resource "hcloud_rdns" "master" {
      for_each   = var.nodenames
      server_id  = hcloud_server.ubuntu2204[each.value].id
      ip_address = hcloud_server.ubuntu2204[each.value].ipv4_address
      dns_ptr    = format("node-%03d.example.com", each.value)
    }

    data "hetznerdns_zone" "dns_zone" {
      name = "example.com"
    }

    resource "hetznerdns_record" "web" {
      for_each = var.nodenames
      zone_id  = data.hetznerdns_zone.dns_zone.id
      name     = format("node-%03d", each.value)
      value    = hcloud_server.ubuntu2204[each.value].ipv4_address
      type     = "A"
      ttl      = 600
    }

    resource "zabbix_host_group" "torrific_group" {
      name = "Torrific project hosts"
    }

    resource "zabbix_host" "demo_host" {
      for_each = var.nodenames
      host     = hcloud_server.ubuntu2204[each.value].ipv4_address
      name     = format("Torrific node-%03d", each.value)

      interfaces {
        ip   = hcloud_server.ubuntu2204[each.value].ipv4_address
        main = true
      }

      groups    = [zabbix_host_group.torrific_group.name]
      templates = ["ICMP Ping"]
    }

    resource "local_file" "ansible_inventory" {
      content = templatefile("inventory.tmpl", {
        hosts = hcloud_server.ubuntu2204
      })
      filename = "ansible/inventories/torrific/hosts"
    }

Ansible bit

The Ansible part is mostly unchanged from my previous blog post on the subject. There were minor adjustments because I now use Ubuntu 22.04 as the base image instead of 20.04. It also uses the inventory file produced by Terraform.

    ---
    - hosts: tornodes
      roles:
        - { role: community/firewall, tags: ['firewall', 'baseline'] }
        - { role: lutra-base, tags: ['lutra-base', 'baseline'] }
        - { role: postfix, tags: ['postfix', 'baseline'] }
        - { role: community/sshd, tags: ['sshd', 'baseline'] }
        - { role: logcheck, tags: ['logcheck', 'application'] }
        - { role: backup, tags: ['backup', 'baseline'] }
        - { role: zabbix_agent, tags: ['zabbix_agent', 'baseline'] }
        - { role: tordaemon, tags: ['tordaemon', 'application'] }

See my previous blog post on the subject for more details.

Demo video

Below is the ad hoc video I created.

After creating the video I destroyed all new machines and redeployed the set to get fresh IP addresses.