Database backups for mastodon.nl now use ZFS snapshots too

Posted on zo 02 juni 2024 in mastodonnl

As I detailed a couple of months back, the mastodon media store is ZFS based and snapshots are pushed offsite multiple times a day. For the database servers, we used a daily SQL dump that was sent offsite. Good enough, but could be better.

Recently we had a small incident where the primary SQL server ran out of disk capacity. This was quickly resolved, but it left the replication to the second SQL server as paused/dead. Up till now this second server was only used as a second onsite copy of all the data. Nice, but not really critical.

Today I wanted to fix this replication. But as I had to recreate the setup anyway, I decided this was a good time to introduce ZFS as storage for the second SQL server. So this is wat happended. I forcefully formatted the existing ext4 filesystem as a ZFS pool (after checking I was on the proper server twice - thank you!).

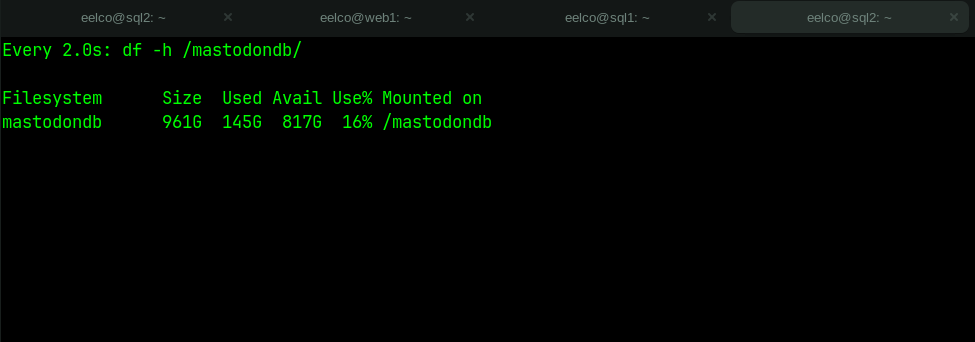

The new pool is now mounted as /mastodondb on the second SQL server, so data now lives in /mastodondb/15/main instead of /var/postgres-data/15/main. A symlink will guide lost administrators and processes to the new location, but the datadir property in /etc/postgresql/15/postgres.conf was updated to point to the new location.

After that I setup the replication bit again using the pg_basebackkup command:

postgres@sql2:~$ pg_basebackup -h 192.168.25.10 -U replication --checkpoint=fast -D /mastodondb/15/main -R --slot=sql2 -C

right now still awaiting the 300 GB of data to get replicated:

As I was waiting, I took the shell script to push snapshots from the media storage system and adjusted it slightly for the SQL server offsite backups:

#!/bin/bash

ZFS_POOL=mastodondb

function report()

{

/usr/bin/logger "$1"

/usr/bin/echo "$1"

}

LAST_SNAPSHOT=`/usr/sbin/zfs list -t snapshot ${ZFS_POOL} | /usr/bin/tail -1 | /usr/bin/awk '{ print $1; }'`

NEXT_SNAPSHOT="${ZFS_POOL}@`date +%F-%H-%M`"

report "Creating snapshot ${NEXT_SNAPSHOT} and pushing delta with ${LAST_SNAPSHOT} offsite"

/usr/sbin/zfs snapshot ${NEXT_SNAPSHOT}

/usr/sbin/zfs send -vi ${LAST_SNAPSHOT} ${NEXT_SNAPSHOT} | ssh USER@REDACTED zfs receive backups/${ZFS_POOL}

if [[ $? > 0 ]];

then

report "Pushing snapshot failed, bummer"

else

report "Finished pushing snapshot offsite"

fi

report "cleanup old snapshots"

/usr/sbin/zfs list -t snapshot ${ZFS_POOL} -o name | /usr/bin/grep ${ZFS_POOL} | /usr/bin/tac | /usr/bin/tail -n +21 | /usr/bin/xargs -n 1 /usr/sbin/zfs destroy

This script is 'good enough', but does not handle the initial push of the first snapshot. So I did this manually. After that I created a cron entry for this script and set up ssh key auth with the backup target.

/usr/sbin/zfs send -v mastodondb@2024-06-02-06-36 | ssh USER@REDACTED zfs receive backups/mastodondb

Now it is just waiting for the initial sync from SQL1 to SQL2 to finish. Maybe next week I can test running a postgres server in my home lab based on the offsite snapshots to verify these backups are usable.